Since I first started maintaining blog posts on handling spatial data in R perhaps the most common question I’ve received is “How do I handle big spatial data in R?”. I thought its finally time to provide a blog post to deal with this particular topic. The answer of course is that there are many, many ways.

Now I know those of you who have asked me in person are thinking “That’s not what he said when I asked?”, he said “That depends on what you mean by big spatial data?”, which was cryptic and unhelpful. I hope this blog post convinces you that both are valid answers and need to be unpacked together.

Note that for now I’ll address raster data only, but I hope to add to this post (or create another) for vector data. Either way, many of the principals I’ll demonatrate apply for any R coding exercise no matter what the data or objective. For those needing to work with large vector data I recommend you have a look at Simple Features for R - library(sf).

I should point out before we get started that while some of what I cover here may help speed up processing, that is not the focus of this post. My goal here is to help you avoid slowing yourself down. I will not cover topics like parallel processing (although I touched on it in my previous post) or processing rasters in chunks - for the latter see this vignette for library(raster).

The Basics

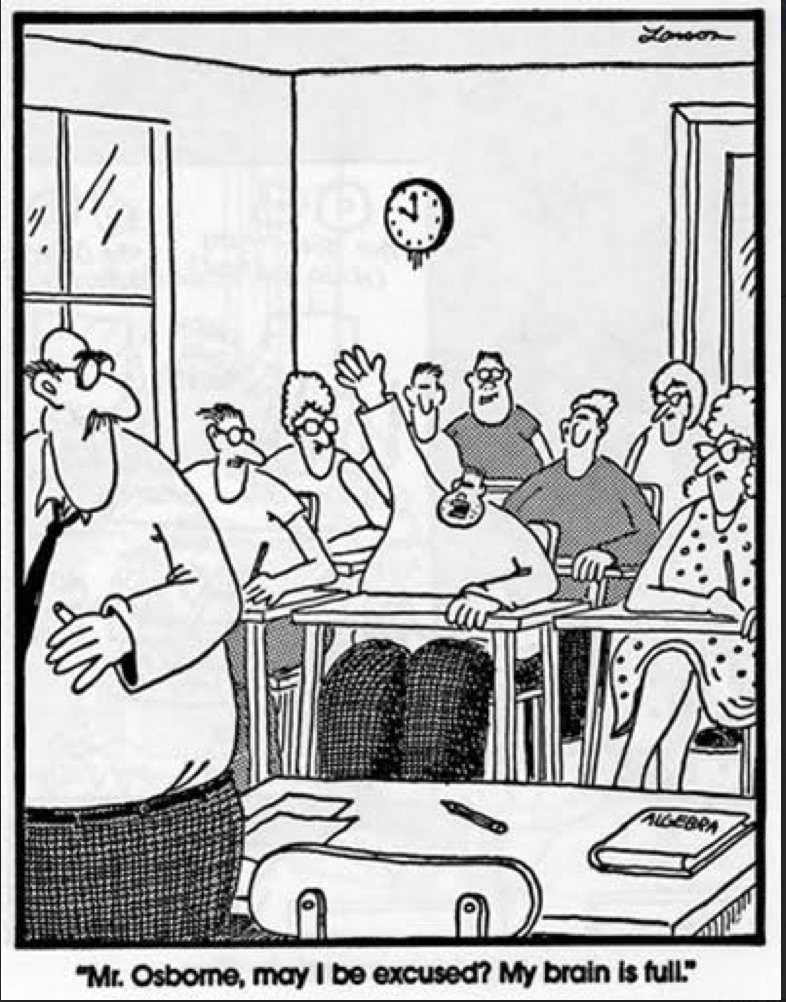

The issue is memory (RAM). Think of it as brain capacity, and if R’s brain is full, things start to happen slowly or not at all. The goal is somewhat like Taoism - keeping the mind clear of all distractions and just being - placing our will in harmony with the natural univeRse.

Sorry, I’ll avoid all philosophy from here ;) Note that I am also going to avoid complexities and will try to use laymens terms. This may require some abstractions of the truth that could fill whole blogs with caveats and explanations, so I’ll just ask advanced R users to please forgive me and turn a blind eye. I hope some of what I’ll demonstrate here is useful for advanced users, but otherwise I’d recommend reading resources like Hadley Wickham’s section on the way in which R uses memory in his book Advanced R as it really can help you improve your code to manage memory better.

Let’s start by seeing how full R’s brain is before we’ve asked it to think about anything.

ls() #lists all objects in memory## character(0)library(pryr)

mem_used() #gives estimate of memory being used## 33.2 MBNo objects in memory, and not much being used! Note that there’s always something happening so mem_used() will never return 0. I’ll keep calling the mem_used() function throughout to show you how different operations affect how full R’s brain is.

Now let’s see what happens when we add a raster. Note that I’ll start by using the SRTM90 digital elevation model used in the Handling Spatial Data in R - #2 post, which you can download here if you don’t have it already. It’s not a particularly big raster, but it’ll suffice for the purposes of demonatration.

I’ll also use the working directory tricks I explain in the Housekeeping section of that post. Note the addition of tempwd.

if (Sys.getenv("USER")=='jasper') {datwd="/Users/jasper/Dropbox/SAEON/Training/SpatialDataPrimer/Example/Data/"

giswd="/Users/jasper/Documents/GIS/"

reswd="/Users/jasper/Dropbox/SAEON/Training/SpatialDataPrimer/Example/Results/"

tempwd="/Users/jasper/Dropbox/SAEON/Training/SpatialDataPrimer/Example/temp/"}

if (Sys.getenv("USER")=='MACUseR') {datwd=""; giswd=""; reswd=""} #For Mac/Linux users

if (Sys.getenv("USERNAME")=='WINDOZEUseR') {datwd=""; giswd=""; reswd=""} #For Windows

mem_used()## 33.6 MBlibrary(raster, quietly = T)##

## Attaching package: 'raster'## The following object is masked from 'package:pryr':

##

## subsdem <- raster(paste0(giswd, "SRTM/srtm_40_19.tif"))

mem_used()## 87.1 MBSo adding the directory file paths made almost no difference, but the raster is occupying ~50MB! But hang on… I recall that file being larger when I downloaded it. Let’s check?

file.size(paste0(giswd, "SRTM/srtm_40_19.tif"))/10^6 #Note the "/10^6" to convert to MB## [1] 72.12069The real file is bigger? This is because the raster() function doesn’t call the whole file into memory. It creates a link to the file and only calls the info it needs to manipulate it. This is very clever (thanks Robert Hijmans!), and avoids R’s brain getting too full.

Ok, so what if we create a new object based on the object that is linked to file?

newdem <- dem

mem_used()## 87.2 MBIt hardly changed the memory usage? The reason being that R is very very clever, and knows that newdem is identical to dem in all but name, so all the newdem object is storing is enough info to manipulate it and it’ll call what it needs from dem (or directly from the source file) when it needs it.

Where we start to get into trouble is when we start to do stupid things like…

newdem <- dem*2

mem_used()## 376 MBWhoa?! ~300MB of brain space filled by one operation!!!

The issue is that by multiplying dem by 2 we’ve created an object with different properties to the source file, so R has to keep it somewhere, and since you didn’t tell R where to store it on your computer it has to remember it. Let’s compare the properties of dem and newdem.

dem## class : RasterLayer

## dimensions : 6001, 6001, 36012001 (nrow, ncol, ncell)

## resolution : 0.0008333333, 0.0008333333 (x, y)

## extent : 14.99958, 20.00042, -35.00042, -29.99958 (xmin, xmax, ymin, ymax)

## coord. ref. : +proj=longlat +datum=WGS84 +no_defs +ellps=WGS84 +towgs84=0,0,0

## data source : /Users/jasper/Documents/GIS/SRTM/srtm_40_19.tif

## names : srtm_40_19

## values : -32768, 32767 (min, max)newdem## class : RasterLayer

## dimensions : 6001, 6001, 36012001 (nrow, ncol, ncell)

## resolution : 0.0008333333, 0.0008333333 (x, y)

## extent : 14.99958, 20.00042, -35.00042, -29.99958 (xmin, xmax, ymin, ymax)

## coord. ref. : +proj=longlat +datum=WGS84 +no_defs +ellps=WGS84 +towgs84=0,0,0

## data source : in memory

## names : srtm_40_19

## values : -56, 4464 (min, max)See how the “data source” for dem is a file path, whereas for newdem it says “in memory”.

This isn’t a problem if the file is small, but it is a problem for big files or the accumulation of many smaller ones. If you’re working with big files, a better alternative to the code above would be to write out the file and create a link to it. Let’s put it in the tempwd we created earlier.

newdem <- writeRaster(dem*2, paste0(tempwd, "newdem"))

mem_used()## 88.6 MBWow!

But hang on? It seems like newdem is much smaller than dem, which was ~50MB? Surely they should be similar? Let’s look at their properties again.

dem## class : RasterLayer

## dimensions : 6001, 6001, 36012001 (nrow, ncol, ncell)

## resolution : 0.0008333333, 0.0008333333 (x, y)

## extent : 14.99958, 20.00042, -35.00042, -29.99958 (xmin, xmax, ymin, ymax)

## coord. ref. : +proj=longlat +datum=WGS84 +no_defs +ellps=WGS84 +towgs84=0,0,0

## data source : /Users/jasper/Documents/GIS/SRTM/srtm_40_19.tif

## names : srtm_40_19

## values : -32768, 32767 (min, max)newdem## class : RasterLayer

## dimensions : 6001, 6001, 36012001 (nrow, ncol, ncell)

## resolution : 0.0008333333, 0.0008333333 (x, y)

## extent : 14.99958, 20.00042, -35.00042, -29.99958 (xmin, xmax, ymin, ymax)

## coord. ref. : +proj=longlat +datum=WGS84 +no_defs +ellps=WGS84 +towgs84=0,0,0

## data source : /Users/jasper/Dropbox/SAEON/Training/SpatialDataPrimer/Example/temp/newdem.grd

## names : srtm_40_19

## values : -56, 4464 (min, max)This confirms that newdem is now linked to a file, but also that the objects have the same extent and resolution as we expected. Only the pixel values differ, but that should not make a big difference?

It turns out that the difference ooccurs because by writing out the raster without indicating a file extention or format R has used the native format for library(raster), which in your file system creates the files “newdem.grd” and “newdem.gri” in your tempwd folder. This file format was designed specifically so that R can create a link calling all the info it needs to manipulate the file while minimizing memory usage. The memory required for R to create a link to raster files varies quite a bit between file formats (.asc, .sdat, .img, etc) and it turns out that GeoTiffs (.tif) like the SRTM data we called earlier are particularly bad.

I know what you’re thinking - “This is cool to know, but adding newdem <- writeRaster() to each line of code where I manipulate a raster is cumbersome and untidy”. Fortunately, Prof R.J. Hijmans has provided a solution for this in most cases by including the “filename =” option in most functions. To do the calculation above we could use the calc() function and rewrite it like so…

newdem <- calc(dem, fun=function(x){x * 2}, filename = paste0(tempwd, "newdem"), overwrite=TRUE)

mem_used()## 89.5 MBIn this particular case the code is still more cumbersome than simple raster maths, but in most cases all you’d need add is the “filename = paste0(tempwd,”newdem“)” bit. Note I had to add “overwrite=TRUE” too, otherwise R returns an error. This may seem irritating, but it does save one from embarrasing scenarios like overwriting your source data if you just copied and pasted the filepath and forgot to change it…

Ok, ok, I hear you! So adding “filename = …, overwrite = …” etc every time is cumbersome and irritating too. You may as well go and pay ESRI lotsa $ and click away in ArcGIS?

Well it turns out that Prof Bobby J. H. has created a way for eternally lazy typers like you to solve this, but with great power comes great responsibility! The solution is rasterOptions().

rasterOptions()## format : raster

## datatype : FLT4S

## overwrite : FALSE

## progress : none

## timer : FALSE

## chunksize : 1e+07

## maxmemory : 1e+09

## tmpdir : /var/folders/93/lxzmv0k90xs0lhsgwtdt5s640000gn/T//RtmpgAaSV7/raster//

## tmptime : 168

## setfileext : TRUE

## tolerance : 0.1

## standardnames : TRUE

## warn depracat.: TRUE

## header : noneFirstly, you’ll notice that “overwrite = FALSE” is the default. You can change this at your own peril. I do not advise it.

Secondly, if you look at the help file with ?rasterOptions, you’ll also note a setting called “todisk”, which by default is set to FALSE. If you set this to TRUE, all operations that create new rasters will write the new raster to disk as a temporary file and the raster object in memory will be linked to that file.

Neat? Not really. The problem with this solution is that all raster operations will now involve reading and writing files, even if the rasters are small. Reading and writing operations take time, and if you have lots of small file operations this will probably slow you down more than if you just ask R to remember all the small files…

In short, I’d advocate just typing “filename = …, overwrite = …” when you need it.

Interlude

To carry on with the irritating mind-reading, I bet you’ve come around to thinking “Well if the goal is to keep everything out of R’s memory, why are we trying to do GIS in R at all?”

My answer is “Exactly! You’re starting to get it!!!” We’re not trying to do GIS in R, we’re trying to do GIS from R!!!

If you’re reading this blog, you’re likely already sold on the value of working in R for a variety of other reasons including things like statistical flexibility, visualization tools, reproducibility, etc. You’re probably only interested in handling spatial data in R because you’re sick of clicking on stuff in ArcGIS or QGIS and want to do everything you need in one workflow.

The beauties are 1) if you’re familiar with R, learning to handle spatial data with R is not that tricky, and 2) you can do massively powerful spatial data analyses from R, because if the internal* functions can’t do it, R can call just about whatever software you need to get the job done!

*Here I mean functions like those in library(raster), but I should add the proviso that this and many other R libraries are already cleverly designed to call other software to do their bidding wherever it is deemed more efficient to do so.

Doing GIS from R

In the past few years I have started working with very large datasets like the 30m National Land Cover Data for South Africa and the full record of daily or 16-day composite MODIS images for the Cape Floristic Region. As you would imagine, I have started to come up against some computational issues and have had to find ways around them since just getting a bigger computer is not always a viable option.

One of my best finds has been library(gdalUtils), which provides wrapper functions for GDAL (Geospatial Data Abstraction Library). GDAL is a massively powerful software library (written in C and C++) for reading and writing raster and vector geospatial data formats, and includes many command line utilities for data translation and processing. It actually forms the basis of many (if not most) commonly used GIS programmes like QGIS, GRASS, SAGA, IDRISI, etc - here’s a list!

Here’s a quick demonstration calling on GDAL to project our DEM to UTM34S, using library(gdalUtils)’ gdalwarp() wrapper function.

mem_used()## 89.7 MBlibrary(gdalUtils)

gdalwarp(paste0(giswd, "SRTM/srtm_40_19.tif"),

dstfile=paste0(tempwd, "srtm_UTM34S"),

t_srs="+proj=utm +zone=34 +south +ellps=WGS84 +datum=WGS84 +units=m +no_defs",

output_Raster=TRUE,

overwrite=TRUE,verbose=TRUE)## Checking gdal_installation...## Scanning for GDAL installations...## Checking Sys.which...## Checking common locations...## GDAL version 1.11.4## GDAL command being used: "/Library/Frameworks/GDAL.framework/Versions/1.11/Programs/gdalwarp" -overwrite -t_srs "+proj=utm +zone=34 +south +ellps=WGS84 +datum=WGS84 +units=m +no_defs" -of "GTiff" "/Users/jasper/Documents/GIS/SRTM/srtm_40_19.tif" "/Users/jasper/Dropbox/SAEON/Training/SpatialDataPrimer/Example/temp/srtm_UTM34S"## class : RasterBrick

## dimensions : 6654, 5693, 37881222, 1 (nrow, ncol, ncell, nlayers)

## resolution : 85.7311, 85.7311 (x, y)

## extent : -79261.36, 408805.8, 6110386, 6680840 (xmin, xmax, ymin, ymax)

## coord. ref. : +proj=utm +zone=34 +south +datum=WGS84 +units=m +no_defs +ellps=WGS84 +towgs84=0,0,0

## data source : /Users/jasper/Dropbox/SAEON/Training/SpatialDataPrimer/Example/temp/srtm_UTM34S

## names : srtm_UTM34S

## min values : -32768

## max values : 32767mem_used()## 93.1 MBVery little memory taken, because nothing ever came into R’s memory.

Now let’s compare the time taken to reproject our DEM using library(raster)’s projectRaster() function versus gdalwarp().

system.time(

gdalwarp(paste0(giswd, "SRTM/srtm_40_19.tif"),

dstfile=paste0(tempwd, "srtm_UTM34S"),

t_srs="+proj=utm +zone=34 +south +ellps=WGS84 +datum=WGS84 +units=m +no_defs",

output_Raster=TRUE,

overwrite=TRUE,verbose=TRUE)

)## Checking gdal_installation...## Scanning for GDAL installations...## Checking the gdalUtils_gdalPath option...## GDAL version 1.11.4## GDAL command being used: "/Library/Frameworks/GDAL.framework/Versions/1.11/Programs/gdalwarp" -overwrite -t_srs "+proj=utm +zone=34 +south +ellps=WGS84 +datum=WGS84 +units=m +no_defs" -of "GTiff" "/Users/jasper/Documents/GIS/SRTM/srtm_40_19.tif" "/Users/jasper/Dropbox/SAEON/Training/SpatialDataPrimer/Example/temp/srtm_UTM34S"## user system elapsed

## 1.704 0.619 3.205system.time(

demUTM <- projectRaster(dem, crs = CRS("+proj=utm +zone=34 +south +ellps=WGS84 +datum=WGS84 +units=m +no_defs"), filename = paste0(tempwd, "newdem"), overwrite=TRUE)

)## user system elapsed

## 60.521 82.914 264.069Phew! Well that was painful… I think it’s pretty clear that gdalwarp() was MUCH more efficient! Note that you can also use gdalwarp() to read the raster into R (by just assigning it “myobject <- gdalwarp(…)”). This does slow it down, but only by a couple of seconds. If you have a set of different operations you’d like to perform it’s quickest to run them line by line - writing new files from GDAL each time - and then only read in the last file.

Note that to give projectRaster() a fair chance, I’ve tried it with and without “filename =” and with and without creating the new demUTM object and the results were very similar.

I’ll leave it there. Have fun exploring other library(gdalUtils) functions! Note that the are similar R packages with wrapper functions for GRASS and SAGA, and probably others, but I’ll leave exploring those up to you too.

Before we go, lets delete all our temporary files.

file.remove(list.files(tempwd, full.names = T)) #remove all files from our temp folder## [1] TRUE TRUE TRUEAnd of course…

sessionInfo()## R version 3.5.1 (2018-07-02)

## Platform: x86_64-apple-darwin15.6.0 (64-bit)

## Running under: macOS High Sierra 10.13.6

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/3.5/Resources/lib/libRblas.0.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/3.5/Resources/lib/libRlapack.dylib

##

## locale:

## [1] en_GB.UTF-8/en_GB.UTF-8/en_GB.UTF-8/C/en_GB.UTF-8/en_GB.UTF-8

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] gdalUtils_2.0.1.14 raster_2.6-7 sp_1.3-1

## [4] pryr_0.1.4

##

## loaded via a namespace (and not attached):

## [1] Rcpp_0.12.18 knitr_1.20 magrittr_1.5

## [4] lattice_0.20-35 foreach_1.4.4 stringr_1.3.1

## [7] tools_3.5.1 rgdal_1.3-4 grid_3.5.1

## [10] xfun_0.3 R.oo_1.22.0 htmltools_0.3.6

## [13] iterators_1.0.10 yaml_2.2.0 rprojroot_1.3-2

## [16] digest_0.6.15 bookdown_0.7 R.utils_2.6.0

## [19] codetools_0.2-15 evaluate_0.11 rmarkdown_1.10

## [22] blogdown_0.8 stringi_1.2.4 compiler_3.5.1

## [25] R.methodsS3_1.7.1 backports_1.1.2